Difference between revisions of "Nekrocemetery"

Spideralex (talk | contribs) |


== Archives and libraries contents ==

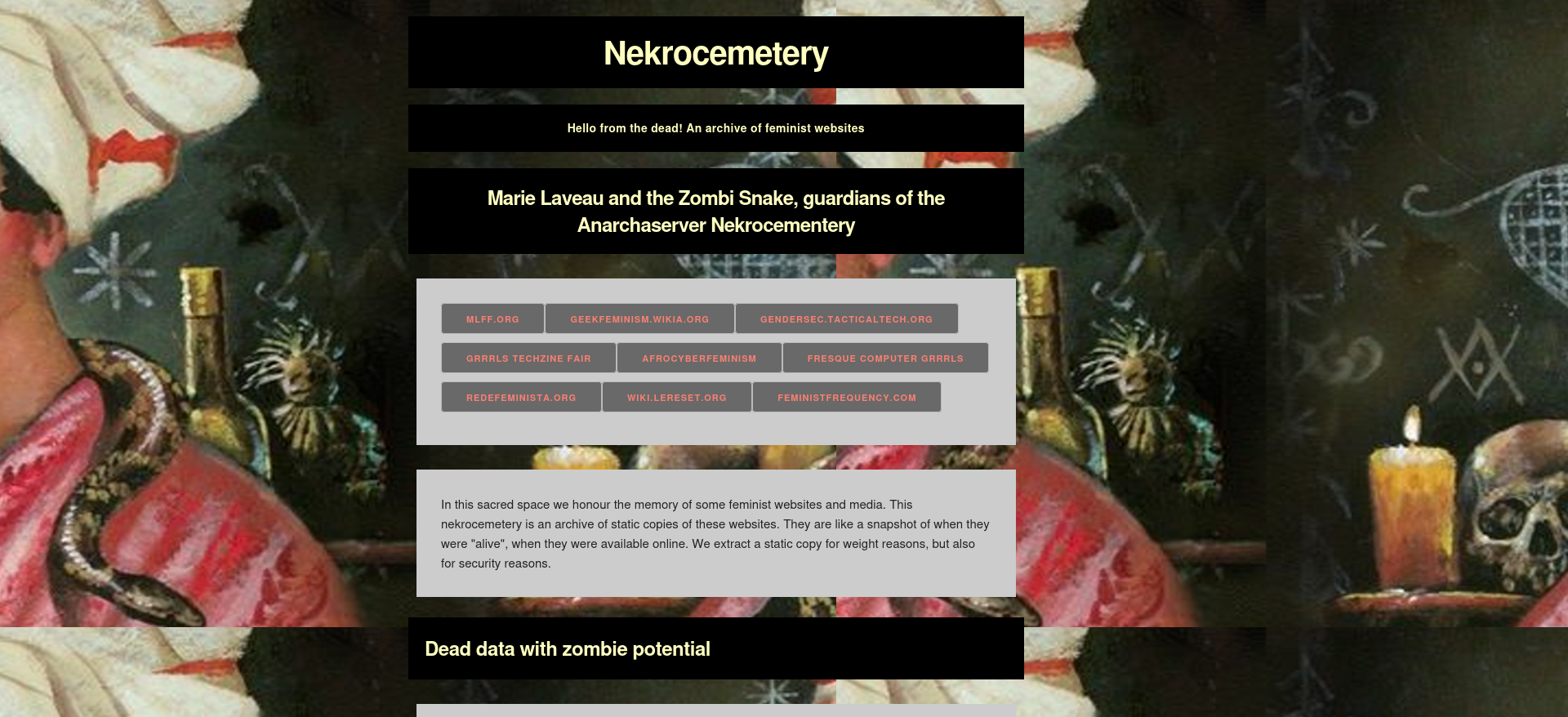

* '''Marie Laveau and the Zombi Snake, guardians of the [https://nekrocemetery.anarchaserver.org/ Anarchaserver Nekrocementery]''' - "In this sacred space we honour the memory of some feminist websites and media. This nekrocemetery is an archive of static copies of these websites. They are like a snapshot of when they were "alive", when they were available online. We extract a static copy for weight reasons, but also for security reasons." | |||

* '''[https://archive.org The Internet Archive]''', a 501(c)(3) non-profit - "is building a digital library of Internet sites and other cultural artifacts in digital form. Like a paper library, we provide free access to researchers, historians, scholars, people with print disabilities, and the general public. Our mission is to provide Universal Access to All Knowledge." | |||

** '''[https://web.archive.org/ The waybackmachine]''' - "Search the history of more than 1 trillion web pages on the Internet." | |||

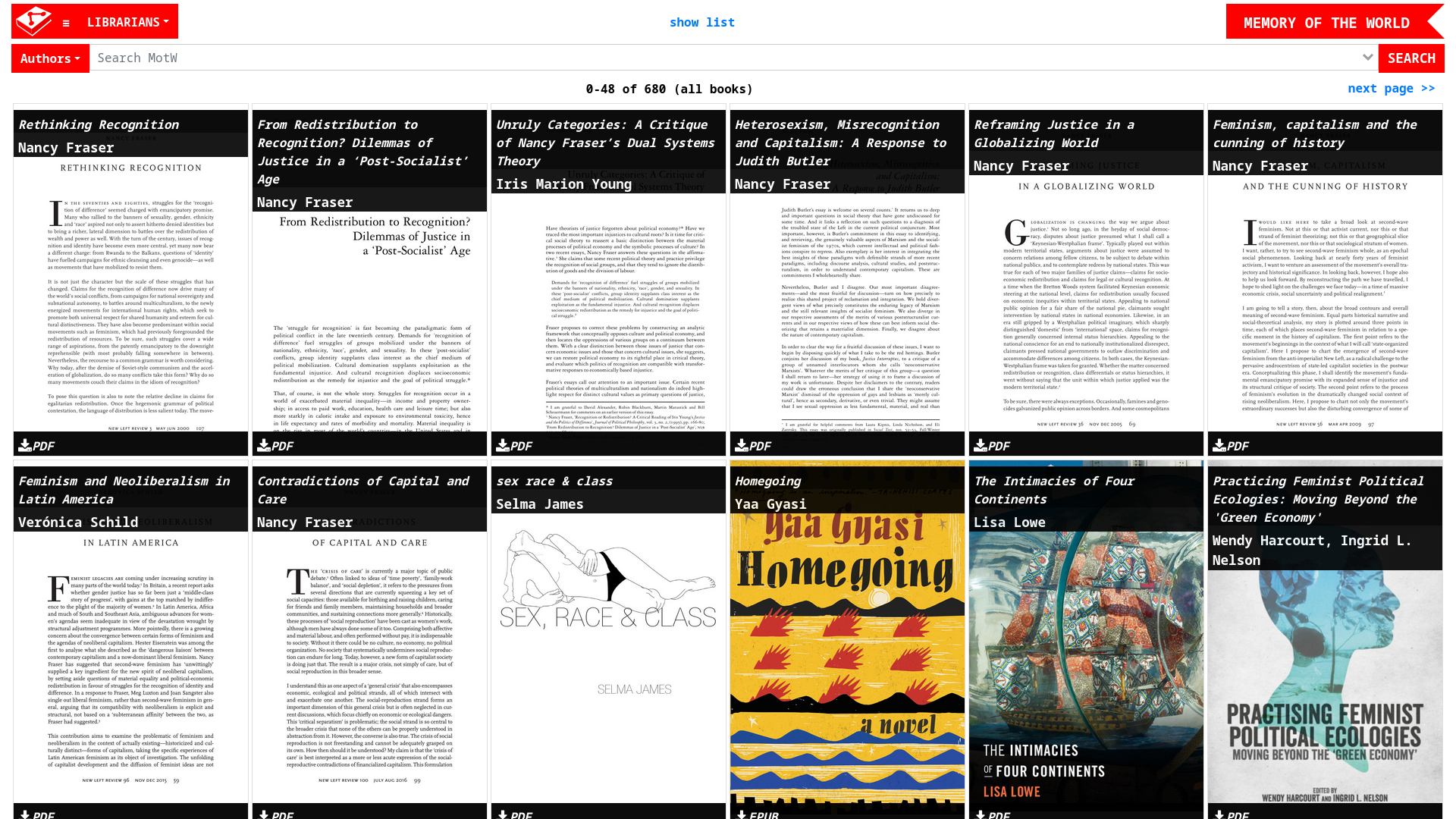

* '''[https://www.memoryoftheworld.org/ Memory of the world]''' - "library: Free Libraries for Every Soul: Dreaming of the Online Library" | |||

** '''[https://feminism.memoryoftheworld.org/#/books/ a feminist pirate library]''' | |||

* Founded in 1996, '''[https://ubu.com/ UbuWeb]''' is a pirate shadow library consisting of hundreds of thousands of freely downloadable avant-garde artifacts. | |||

* '''[https://annas-archive.org/ Anna’s Archive]''' - "The largest truly open library in human history. 📈 61,344,044 books, 95,527,824 papers — preserved forever" | |||

* [https://libgen.la/ Library Genesis+] - "20.10.2025 Added bibliography search in local databases of the Worldcat.org and the Russian State Library" | |||

* '''[https://sci-hub.se/ Sci-Hub]''' coverage is larger than 90% for all papers published up to 2022 in major academic outlets. | |||

* Official '''[https://z-library.sk/ Z-Library Project]''' - Free Instant Access to eBooks and Articles | |||

* '''[https://rhizome.org Rhizome]''' champions born-digital art and culture through commissions, exhibitions, scholarship, and digital preservation. | |||

** '''[https://rhizome.org/tags/preservation/ Rhizome’s digital preservation program]''' supports ongoing access to born-digital culture - "In addition to conserving individual artworks and other cultural artifacts, the Rhizome preservation team develops infrastructure, researches and pilots new preservation methods, and engages with open-source software projects." | |||

* A [https://en.wikipedia.org/wiki/List_of_web_archiving_initiatives list of web archiving initiatives] from wikipedia | |||


[[File:Screenshot 2025-11-29 at 00-14-26 Memory of the World Library.png]]

== Tools to archive and consult internet contents ==

=== Archive web contents ===

* To create an [https://dev.to/rijultp/how-to-use-wget-to-mirror-websites-for-offline-browsing-48l4 offline mirror of a website] using wget, you can use the following command (replace zoiahorn.anarchaserver.org and the paths with your own site's URL and target directory):

wget --mirror --convert-links --adjust-extension --page-requisites --no-parent --wait=2 --limit-rate=200k --domains=zoiahorn.anarchaserver.org --reject-regex=".*(ads|social).*" -P ./specficwebsite https://zoiahorn.anarchaserver.org/specfic/

* [https://www.httrack.com HTTrack] is a free (GPL, libre/free software) and easy-to-use offline browser utility.

httrack --mirror --robots=0 --stay-on-same-domain --keep-links=0 --path /home/machine/httrackspecfic --max-rate=10000000 --disable-security-limits --sockets=2 --quiet https://zoiahorn.anarchaserver.org/specfic/ "-*" "+zoiahorn.anarchaserver.org/*"

* [https://webrecorder.net/browsertrix/ webrecorder.net tools "browsertrix"]: a web archiving platform that combines several Webrecorder tools in one place in your browser

** You can use [https://crawler.docs.browsertrix.com/ browsertrix-crawler] (the core component of the Browsertrix service) from the command line, much like wget, but running in Docker. It can help when some of the sites you are archiving load resources with JavaScript.

** Interactive archiving in your browser: [https://webrecorder.net/archivewebpage/ Archive websites] as you browse with the ArchiveWeb.page Chrome extension or standalone desktop app.

* The [https://archiveweb.page/ ArchiveWeb.page] extension for Chrome lets you record a web page as you visit it, save it in your browser and play it back there later

* [https://conifer.rhizome.org/ Conifer] is Rhizome's user-driven hosted web archiving service for easily creating and sharing high-fidelity, fully interactive copies of almost any website.

* When there is no Internet, [https://kiwix.org/ there is Kiwix] - "Access vital information anywhere. Use our apps for offline reading on the go or the Hotspot in every place you want to call home. Ideal for remote areas, emergencies, or independent knowledge access."

** Kiwix can give offline access to [https://library.kiwix.org/#lang=eng&category= 1033 books] such as Wikipedia, the OpenStreetMap wiki, iFixit, and many more websites and knowledge resources

See this nice resource: the webzine about [https://zinebakery.com/assets/homemade-zines/bakeshop-zines/DIYWebArchiving-DombrowskiKijasKreymerWalshVisconti-V4.pdf DIY Web Archiving]

Also this collection of tools and resources: [https://github.com/iipc/awesome-web-archiving?tab=readme-ov-file Awesome Web Archiving] - web archiving "is the process of collecting portions of the World Wide Web to ensure the information is preserved in an archive for future researchers, historians, and the public."

And a [https://github.com/datatogether/research/tree/master/web_archiving comparison of web archiving software]

[[File:Conifer.png]]

=== Documenting Digital Attacks / contents ===

* The '''[https://digitalfirstaid.org/documentation/ Digital First Aid kit]''' - "Documenting digital attacks can serve a number of purposes. Recording what is happening in an attack can help you ..."

* '''[https://pts-project.org/colander-companion/ Colander Companion]''' - "Collect web content as evidence for your Colander investigations"

* '''[https://mnemonic.org/ Mnemonic]''' works globally to help human rights defenders effectively use digital documentation of human rights violations and international crimes to support advocacy, justice and accountability.

== How to make a static snapshot of a soon-to-be zombie website for the nekrocemetery of anarchaserver ==

https://ressources.labomedia.org/archiver_et_rendre_statique_un_wiki

=== restart apache inside container ===

<code> systemctl restart apache2</code>

[[File:Screenshot 2025-11-29 at 01-05-51 Nekrocemetery.png]]

Latest revision as of 10:14, 29 November 2025

Nekrocemetery, or how to archive internet or web content

How to archive internet / web content that is going to disappear?

Archives and libraries contents

- Marie Laveau and the Zombi Snake, guardians of the Anarchaserver Nekrocemetery - "In this sacred space we honour the memory of some feminist websites and media. This nekrocemetery is an archive of static copies of these websites. They are like a snapshot of when they were "alive", when they were available online. We extract a static copy for weight reasons, but also for security reasons."

- The Internet Archive, a 501(c)(3) non-profit - "is building a digital library of Internet sites and other cultural artifacts in digital form. Like a paper library, we provide free access to researchers, historians, scholars, people with print disabilities, and the general public. Our mission is to provide Universal Access to All Knowledge."

- The Wayback Machine - "Search the history of more than 1 trillion web pages on the Internet."

- Memory of the world - "library: Free Libraries for Every Soul: Dreaming of the Online Library"

- Founded in 1996, UbuWeb is a pirate shadow library consisting of hundreds of thousands of freely downloadable avant-garde artifacts.

- Anna’s Archive - "The largest truly open library in human history. 📈 61,344,044 books, 95,527,824 papers — preserved forever"

- Library Genesis+ - "20.10.2025 Added bibliography search in local databases of the Worldcat.org and the Russian State Library"

- Sci-Hub coverage is larger than 90% for all papers published up to 2022 in major academic outlets.

- Official Z-Library Project - Free Instant Access to eBooks and Articles

- Rhizome champions born-digital art and culture through commissions, exhibitions, scholarship, and digital preservation.

- Rhizome’s digital preservation program supports ongoing access to born-digital culture - "In addition to conserving individual artworks and other cultural artifacts, the Rhizome preservation team develops infrastructure, researches and pilots new preservation methods, and engages with open-source software projects."

- A list of web archiving initiatives from Wikipedia

Tools to archive and consult internet contents

Archive web contents

- To create an offline mirror of a website using wget, you can use the following command (replace zoiahorn.anarchaserver.org and the paths with your own site's URL and target directory):

wget --mirror --convert-links --adjust-extension --page-requisites --no-parent --wait=2 --limit-rate=200k --domains=zoiahorn.anarchaserver.org --reject-regex=".*(ads|social).*" -P ./specficwebsite https://zoiahorn.anarchaserver.org/specfic/

- HTTrack is a free (GPL, libre/free software) and easy-to-use offline browser utility.

httrack --mirror --robots=0 --stay-on-same-domain --keep-links=0 --path /home/machine/httrackspecfic --max-rate=10000000 --disable-security-limits --sockets=2 --quiet https://zoiahorn.anarchaserver.org/specfic/ "-*" "+zoiahorn.anarchaserver.org/*"

- webrecorder.net tools "browsertrix": a web archiving platform that combines several Webrecorder tools in one place in your browser

- You can use browsertrix-crawler (the core component of the Browsertrix service) from the command line, much like wget, but running in Docker. It can help when some of the sites you are archiving load resources with JavaScript.

- Interactive archiving in your browser: archive websites as you browse with the ArchiveWeb.page Chrome extension or standalone desktop app.

- The ArchiveWeb.page extension for Chrome lets you record a web page as you visit it, save it in your browser and play it back there later

- Conifer is Rhizome's user-driven hosted web archiving service for easily creating and sharing high-fidelity, fully interactive copies of almost any website.

- When there is no Internet, there is Kiwix - "Access vital information anywhere. Use our apps for offline reading on the go or the Hotspot in every place you want to call home. Ideal for remote areas, emergencies, or independent knowledge access."

- Kiwix can give offline access to 1033 books such as Wikipedia, the OpenStreetMap wiki, iFixit, and many more websites and knowledge resources
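The wget invocation in the list above hard-codes the host name in two places (--domains and the URL). A minimal sketch of a reusable wrapper follows; the function names url_host and mirror_site are hypothetical, and only the wget flags are taken from the command above (the --reject-regex filter is left out and can be added back as needed).

```shell
# Hypothetical helper names; only the wget flags come from the command above.

# Print the host part of a URL, using plain parameter expansion.
url_host() {
  rest="${1#*://}"            # drop the scheme, e.g. "https://"
  rest="${rest%%/*}"          # drop the path
  printf '%s\n' "${rest%%:*}" # drop an optional ":port"
}

# Mirror one site into ./<host>, waiting between requests and
# staying on the site's own domain.
mirror_site() {
  host="$(url_host "$1")"
  wget --mirror --convert-links --adjust-extension --page-requisites \
       --no-parent --wait=2 --limit-rate=200k \
       --domains="$host" -P "./$host" "$1"
}
```

Calling mirror_site https://zoiahorn.anarchaserver.org/specfic/ would then download into ./zoiahorn.anarchaserver.org, assuming wget is installed and the site is reachable.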

See this nice resource: the webzine about DIY Web Archiving

Also this collection of tools and resources: Awesome Web Archiving - web archiving "is the process of collecting portions of the World Wide Web to ensure the information is preserved in an archive for future researchers, historians, and the public."

And a comparison of web archiving software

Documenting Digital Attacks / contents

- The Digital First Aid kit - "Documenting digital attacks can serve a number of purposes. Recording what is happening in an attack can help you ..."

- Colander Companion - "Collect web content as evidence for your Colander investigations"

- Mnemonic works globally to help human rights defenders effectively use digital documentation of human rights violations and international crimes to support advocacy, justice and accountability.

How to make a static snapshot of a soon-to-be zombie website for the nekrocemetery of anarchaserver

https://ressources.labomedia.org/archiver_et_rendre_statique_un_wiki

For example, MLFF (aka media liberation front/feminism):

sudo lxc-attach nekrocemetery

wget --mirror --convert-links --html-extension -o log https://www.mlff.org

Be sure to use the final URL, not one that gets redirected (for example from http://mlff.org to http://www.mlff.org); otherwise the archive will contain only one file.
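One way to check for the redirect problem described above is to ask curl where a URL finally lands before mirroring, and to count the files afterwards. This is only a sketch: final_url and count_files are hypothetical helper names, final_url needs curl and network access, and some servers answer HEAD requests differently from normal page loads.

```shell
# Print the URL a site finally answers on, after following redirects.
# Uses HEAD requests (-I) and follows Location headers (-L).
final_url() {
  curl -sIL -o /dev/null -w '%{url_effective}\n' "$1"
}

# Count the regular files below a mirror directory.
# A result of 1 suggests wget stopped at a redirect.
count_files() {
  find "$1" -type f | wc -l | tr -d ' '
}
```

For instance, final_url http://mlff.org shows which address to pass to wget, and count_files www.mlff.org run after the mirror finishes gives a quick sanity check.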

Copy the zombie site under /var/www/nekrocemetery/

- https://nekrocemetery.anarchaserver.org/mlff/

- https://nekrocemetery.anarchaserver.org/gendersec/

- https://nekrocemetery.anarchaserver.org/geekfeminism/
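The copy step can be sketched as a small function. install_zombie is a hypothetical name, and the mapping from a directory under /var/www/nekrocemetery/ to a path on nekrocemetery.anarchaserver.org is inferred from the URLs listed above rather than confirmed.

```shell
# install_zombie SRC NAME [WEBROOT]
# Copy the contents of the mirrored directory SRC to WEBROOT/NAME,
# keeping timestamps and permissions. WEBROOT defaults to the
# directory named in the text above.
install_zombie() {
  src="$1"
  name="$2"
  webroot="${3:-/var/www/nekrocemetery}"
  [ -d "$src" ] || { echo "no such mirror directory: $src" >&2; return 1; }
  mkdir -p "$webroot/$name" && cp -a "$src/." "$webroot/$name/"
}
```

Inside the container, install_zombie www.mlff.org mlff would place the mirror in /var/www/nekrocemetery/mlff.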

Configure DNS for a specific zombie site, e.g. gendersec

vi /etc/apache2/sites-available/gendersec.conf

vi /etc/apache2/sites-available/gendersec-le-ssl.conf
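The page does not show what goes into these two files. As a rough sketch only, a plain HTTP virtual host for one zombie site might be generated as below. The function name vhost_conf and the host name gendersec.example.org are placeholders, not the real configuration, and the log directives assume a Debian-style Apache where APACHE_LOG_DIR is defined. The -le-ssl.conf file looks like the kind Certbot typically generates from the HTTP one, but that is an assumption.

```shell
# vhost_conf NAME SERVER_NAME [WEBROOT]
# Print a minimal Apache virtual host serving WEBROOT/NAME as SERVER_NAME.
vhost_conf() {
  name="$1"
  server="$2"
  webroot="${3:-/var/www/nekrocemetery}"
  cat <<EOF
<VirtualHost *:80>
    ServerName $server
    DocumentRoot $webroot/$name
    ErrorLog \${APACHE_LOG_DIR}/$name-error.log
    CustomLog \${APACHE_LOG_DIR}/$name-access.log combined
</VirtualHost>
EOF
}
```

The output could be redirected into /etc/apache2/sites-available/gendersec.conf, enabled with a2ensite gendersec, and checked with apachectl configtest before Apache is restarted.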

restart apache inside container

systemctl restart apache2